AI Extension for Visual Studio Code

Around Christmas, I saw a post on X (Twitter) where someone used the Continue extension and LM Studio to get a Copilot-like feature in VS Code. I decided that this was something I wanted to try out. One of the features in Continue that I found interesting is that it can use different models for the AI. The local model option looked very interesting, since you don’t need a subscription or anything like that to use the tool.

First step - download lmstudio.ai

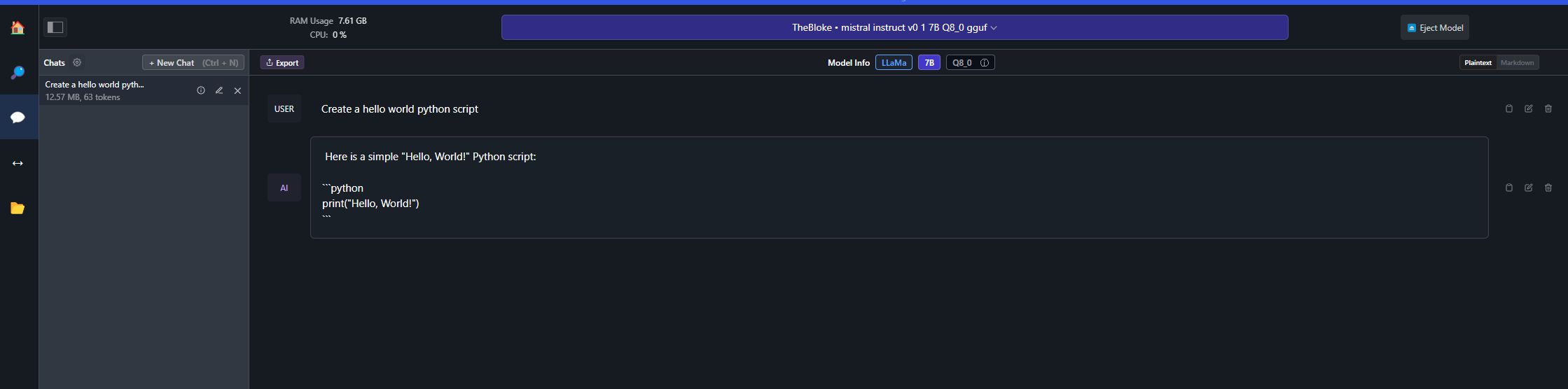

LM Studio runs on Windows, Linux and Mac. I downloaded it for Windows and started the program. First, I chose a model to use: go to Search in the menu on the left side. I chose the one named mistral-7b-instruct-v0.1.Q8_0.gguf. I have no idea whether it is a good choice for LM Studio, but I have used Mistral with GPT4All before.

After this, I clicked the AI Chat button in the menu on the left side to see if it was working. At the top in the middle, choose the model you downloaded and try asking a question. Here is my result:

Second step - install Continue in VS Code

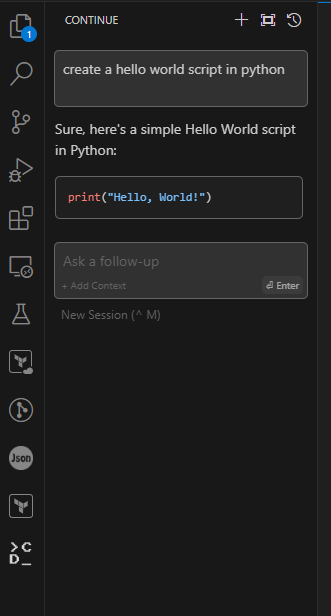

The next step is to get this to work with the Continue extension in VS Code. Go to continue.dev and click the VS Code button to get the documentation for the extension. Then open VS Code, go to the marketplace and install the Continue extension.

When it is installed, you should have a new icon in the left menu in VS Code. Click the Continue icon and ask it a question, for example: create a hello world Python script.

Get Continue to use LM Studio

Continue uses GPT-3.5 and GPT-4 if you don’t change anything. But I want it to use the LM Studio model that I downloaded earlier.

Go to LM Studio and start the server:

Configure VS Code:

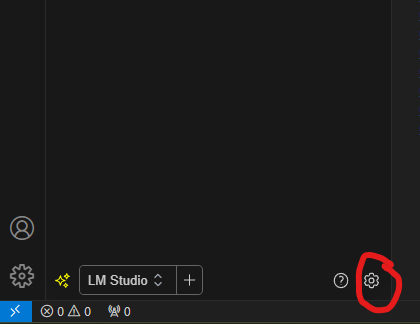

Click on the Continue settings:

and add a new model under the models part in the settings file:

    "models": [
      {
        "title": "GPT-4",
        "provider": "openai-free-trial",
        "model": "gpt-4"
      },
      {
        "title": "GPT-3.5-Turbo",
        "provider": "openai-free-trial",
        "model": "gpt-3.5-turbo"
      },
      {
        "title": "LM Studio",
        "provider": "lmstudio",
        "model": "mistral-7b-instruct-v0.1.Q8_0.gguf"
      }
    ],
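
The same change can also be scripted instead of edited by hand. The sketch below is only an illustration: it assumes the Continue settings file is plain JSON at `~/.continue/config.json` (check where your installation keeps it), and the helper names `add_model` and `update_config_file` are made up for this example. It appends the LM Studio entry to the models list unless an entry with the same title is already there.

```python
import json
from pathlib import Path

# Assumption: Continue keeps its settings here; adjust the path if yours differs.
CONFIG_PATH = Path.home() / ".continue" / "config.json"

# The same entry that is added by hand in the settings file above.
LM_STUDIO_MODEL = {
    "title": "LM Studio",
    "provider": "lmstudio",
    "model": "mistral-7b-instruct-v0.1.Q8_0.gguf",
}


def add_model(config: dict, model: dict) -> dict:
    """Return a copy of config with model appended, unless its title is already listed."""
    models = list(config.get("models", []))
    if not any(existing.get("title") == model["title"] for existing in models):
        models.append(model)
    return {**config, "models": models}


def update_config_file(path: Path = CONFIG_PATH) -> list:
    """Read the settings file, add the LM Studio entry, and write the file back."""
    config = json.loads(path.read_text(encoding="utf-8"))
    updated = add_model(config, LM_STUDIO_MODEL)
    path.write_text(json.dumps(updated, indent=2), encoding="utf-8")
    return [model["title"] for model in updated["models"]]
```

Calling `update_config_file()` rewrites the file in place, so it is worth keeping a backup copy of the settings file first. Running it twice does not add the entry twice.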

Save the config file and choose the LM Studio model, as shown in the previous picture.

Try the same question that was used earlier. If you get a result and no errors, you have successfully set up a Copilot-like assistant that you can use in VS Code.
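
If the question fails in VS Code, it can help to check the LM Studio server on its own, without Continue in between. The following is a minimal sketch, assuming the server listens on `http://localhost:1234/v1` and accepts OpenAI-style chat completion requests; both are assumptions, so compare them with the address shown in LM Studio's server view. The function names `build_request` and `ask` are hypothetical helpers for this example.

```python
import json
import urllib.request

# Assumption: address of the LM Studio local server; use the one LM Studio shows you.
BASE_URL = "http://localhost:1234/v1"
# The model downloaded earlier in this post.
MODEL = "mistral-7b-instruct-v0.1.Q8_0.gguf"


def build_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request in the OpenAI-style chat completions format."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the text of the first answer."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Create a hello world Python script."))` should print an answer. If it raises a connection error instead, the problem is on the LM Studio side rather than in the Continue settings.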

Final thoughts

I haven’t used it much yet, but the local model seems much slower than ChatGPT. Maybe that is because of the model I chose, or because of the hardware I run it on. All in all, it seems like a great tool, but it may be a little slow. I need to use it a little more and test different models before I hit it with the “too slow” hammer.

Here is a short video that showcases an example of how it can be used. There are other ways that Continue can be used (Showcasing the Continue extension in VScode with local LLM using lmstudio).

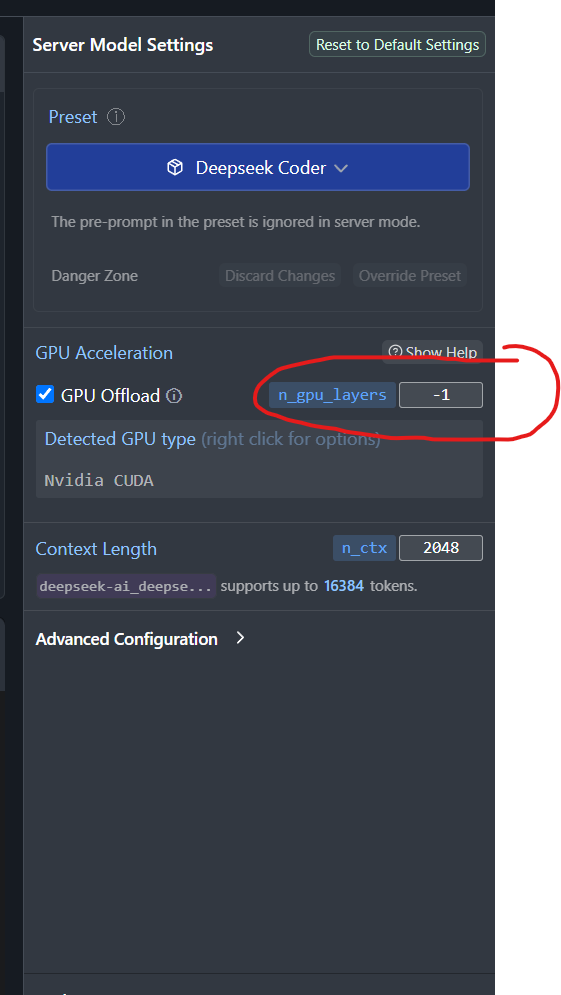

Update 1 (07.01.2024):

After playing with LM Studio for a while, I discovered a setting that offloads some of the load to the GPU. The setting can be found in the right-side menu for both the AI Chat and the Local Server. If it is set to -1, it will offload as much as it can to the GPU.